Celestia Improvement Proposal (CIP) process

Read CIP-1 for information on the CIP process.

Meetings

Celestia Improvement Proposals (CIPs)

| № | Title | Author(s) |

|---|---|---|

| 1 | Celestia Improvement Proposal Process and Guidelines | Yaz Khoury [email protected] |

| 2 | CIP Editor Handbook | Yaz Khoury (@YazzyYaz) |

| 3 | Process for Approving External Resources | Yaz Khoury (@YazzyYaz) |

| 4 | Standardize data expiry time for pruned nodes | Mustafa Al-Bassam (@musalbas), Rene Lubov (@renaynay), Ramin Keene (@ramin) |

| 5 | Rename data availability to data publication | msfew (@fewwwww), Kartin, Xiaohang Yu (@xhyumiracle) |

| 6 | Enforce payment of the gas for a transaction based on a global minimum price | Callum Waters (@cmwaters) |

| 7 | Managing Working Groups in the Celestia Improvement Proposal Process | Yaz Khoury [email protected] |

| 8 | Roles and Responsibilities of Working Group Chairs in the CIP Process | Yaz Khoury [email protected] |

| 9 | Packet Forward Middleware | Alex Cheng (@akc2267) |

| 10 | Coordinated network upgrades | Callum Waters (@cmwaters) |

| 11 | Refund unspent gas | Rootul Patel (@rootulp) |

| 12 | ICS-29 Relayer Incentivisation Middleware | Susannah Evans [email protected] (@womensrights), Aditya Sripal [email protected] (@AdityaSripal) |

| 13 | On-chain Governance Parameters for Celestia Network | Yaz Khoury [email protected], Evan Forbes [email protected] |

| 14 | ICS-27 Interchain Accounts | Susannah Evans [email protected] (@womensrights), Aidan Salzmann [email protected] (@asalzmann), Sam Pochyly [email protected] (@sampocs) |

| 15 | Discourage memo usage | Rootul Patel (@rootulp), NashQueue (@nashqueue) |

| 16 | Make Security Related Governance Parameters Immutable | Mingpei CAO (@caomingpei) |

| 17 | Lemongrass Network Upgrade | Evan Forbes (@evan-forbes) |

| 18 | Standardised Gas and Pricing Estimation Interface | Callum Waters (@cmwaters) |

| 19 | Shwap Protocol | Hlib Kanunnikov (@Wondertan) |

| 20 | Disable Blobstream module | Rootul Patel (@rootulp) |

| 21 | Introduce blob type with verified signer | Callum Waters (@cmwaters) |

| 22 | Removing the IndexWrapper | NashQueue (@Nashqueue) |

| 23 | Coordinated prevote times | Callum Waters (@cmwaters) |

| 24 | Versioned Gas Scheduler Variable | Nina Barbakadze (@ninabarbakadze) |

| 25 | Ginger Network Upgrade | Josh Stein (@jcstein), Nina Barbakadze (@ninabarbakadze) |

| 26 | Versioned timeouts | Josh Stein (@jcstein), Rootul Patel (@rootulp), Sanaz Taheri (@staheri14) |

| 27 | Block limits for number of PFBs and non-PFBs | Josh Stein (@jcstein), Nina Barbakadze (@ninabarbakadze), rach-id (@rach-id), Rootul Patel (@rootulp) |

| 28 | Transaction size limit | Josh Stein (@jcstein), Nina Barbakadze (@ninabarbakadze), Rootul Patel (@rootulp) |

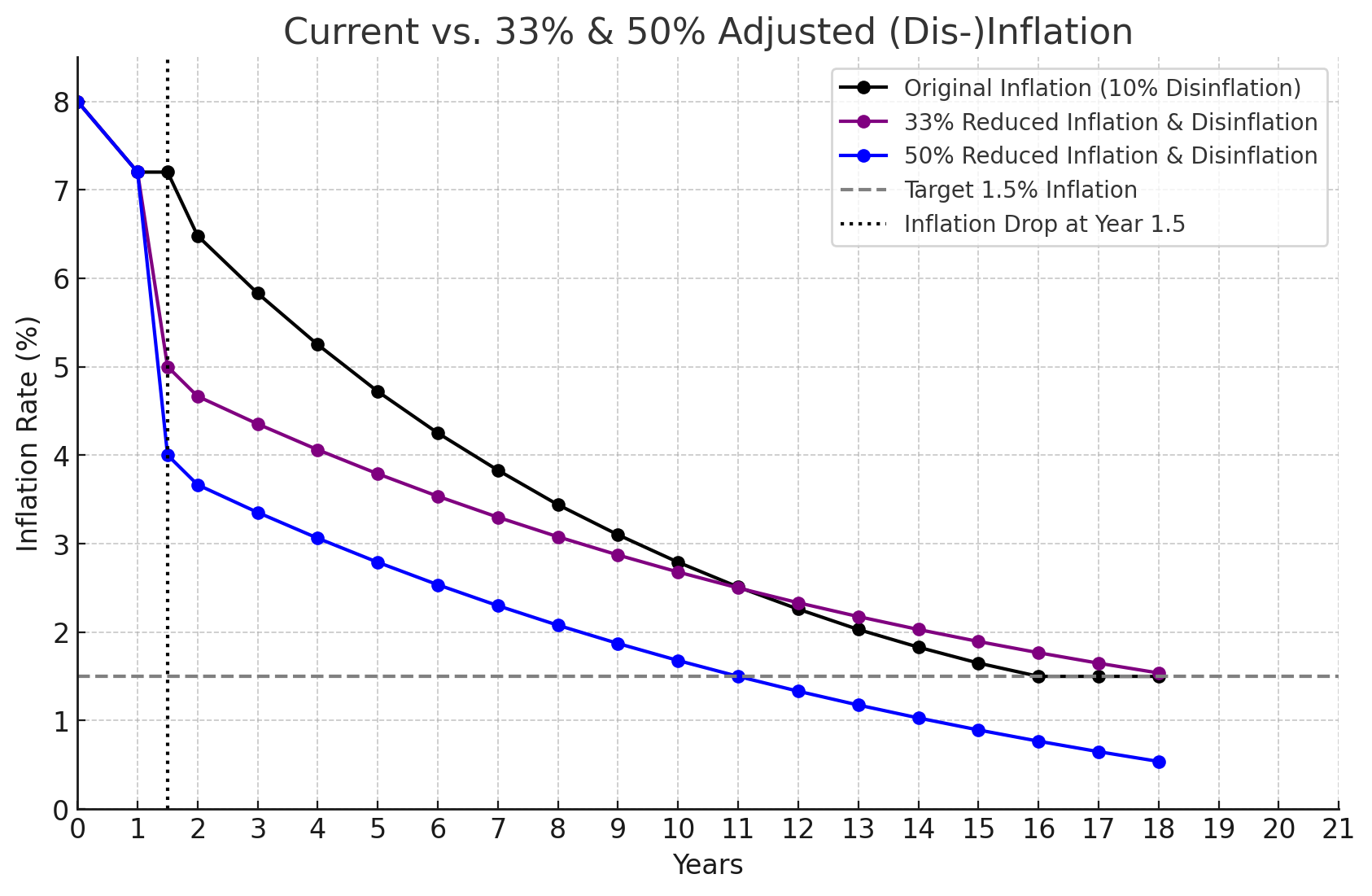

| 29 | Decrease Inflation and Adjust Disinflation | Dean Eigenmann (@decanus), Marko Baricevic (@tac0turtle) |

| 30 | Prevent Auto-Claiming of Staking Rewards | Dean Eigenmann (@decanus), Marko Baricevic (@tac0turtle) |

Contributing

Files in this repo must conform to markdownlint. Install markdownlint and then run:

markdownlint --config .markdownlint.yaml '**/*.md'

Running the site locally

Prerequisites:

mdbook serve -o

| cip | 1 |

|---|---|

| title | Celestia Improvement Proposal Process and Guidelines |

| author | Yaz Khoury [email protected] |

| status | Living |

| type | Meta |

| created | 2023-04-13 |

Table of Contents

- What is a CIP?

- CIP Rationale

- CIP Types

- CIP Work Flow

- Shepherding a CIP

- Core CIPs

- CIP Process

- What belongs in a successful CIP?

- CIP Formats and Templates

- CIP Header Preamble

- author header

- discussions-to header

- type header

- category header

- created header

- requires header

- Linking to External Resources

- Data Availability Specifications

- Consensus Layer Specifications

- Networking Specifications

- Digital Object Identifier System

- Linking to other CIPs

- Auxiliary Files

- Transferring CIP Ownership

- CIP Editors

- CIP Editor Responsibilities

- Style Guide

- Titles

- Descriptions

- CIP numbers

- RFC 2119 and RFC 8174

- History

- Copyright

What is a CIP

CIP stands for Celestia Improvement Proposal. A CIP is a design document providing information to the Celestia community, or describing a new feature for Celestia or its processes or environment. The CIP should provide a concise technical specification of the feature and a rationale for the feature. The CIP author is responsible for building consensus within the community and documenting dissenting opinions.

CIP Rationale

We intend CIPs to be the primary mechanisms for proposing new features, for collecting community technical input on an issue, and for documenting the design decisions that have gone into Celestia. Because the CIPs are maintained as text files in a versioned repository, their revision history is the historical record of the feature proposal.

For Celestia software clients and core devs, CIPs are a convenient way to track the progress of their implementation. Ideally, each implementation maintainer would list the CIPs that they have implemented. This will give end users a convenient way to know the current status of a given implementation or library.

CIP Types

There are three types of CIP:

- Standards Track CIP describes any change that affects most or all Celestia implementations, such as a change to the network protocol, a change in block or transaction validity rules, proposed standards/conventions, or any change or addition that affects the interoperability of execution environments and rollups using Celestia. Standards Track CIPs consist of three parts: a design document, an implementation, and (if warranted) an update to the formal specification. Furthermore, Standards Track CIPs can be broken down into the following categories:

- Core: improvements requiring a consensus fork, as well as changes that are not necessarily consensus critical but may be relevant to “core dev” discussions (for example, validator/node strategy changes).

- Data Availability: improvements to the Data Availability layer that, while not consensus breaking, are relevant for nodes to upgrade to.

- Networking: includes improvements around libp2p and the p2p layer in general.

- Interface: includes improvements around consensus and data availability client API/RPC specifications and standards, and also certain language-level standards like method names. The label “interface” aligns with the client repository and discussion should primarily occur in that repository before a CIP is submitted to the CIPs repository.

- CRC: Rollup standards and conventions, including standards for rollups such as token standards, name registries, URI schemes, library/package formats, and wallet formats that rely on the data availability layer for transaction submission to the Celestia Network.

- Meta CIP describes a process surrounding Celestia or proposes a change to (or an event in) a process. Meta CIPs are like Standards Track CIPs but apply to areas other than the Celestia protocol itself. They may propose an implementation, but not to Celestia’s codebase; they often require community consensus; unlike Informational CIPs, they are more than recommendations, and users are typically not free to ignore them. Examples include procedures, guidelines, changes to the decision-making process, and changes to the tools or environment used in Celestia development.

- Informational CIP describes a Celestia design issue, or provides general guidelines or information to the Celestia community, but does not propose a new feature. Informational CIPs do not necessarily represent Celestia community consensus or a recommendation, so users and implementers are free to ignore Informational CIPs or follow their advice.

It is highly recommended that a single CIP contain a single key proposal or new idea. The more focused the CIP, the more successful it tends to be. A change to one client doesn’t require a CIP; a change that affects multiple clients, or defines a standard for multiple apps to use, does.

A CIP must meet certain minimum criteria. It must be a clear and complete description of the proposed enhancement. The enhancement must represent a net improvement. The proposed implementation, if applicable, must be solid and must not complicate the protocol unduly.

Celestia Improvement Proposal (CIP) Workflow

Shepherding a CIP

Parties involved in the process are you, the champion or CIP author, the CIP editors, and the Celestia Core Developers.

Before diving into writing a formal CIP, make sure your idea stands out. Consult the Celestia community to ensure your idea is original, saving precious time by avoiding duplication. We highly recommend opening a discussion thread on the Celestia forum for this purpose.

Once your idea passes the vetting process, your next responsibility is to present the idea via a CIP to reviewers and all interested parties. Invite editors, developers, and the community to give their valuable feedback through the relevant channels. Assess whether the interest in your CIP matches the work involved in implementing it and the number of parties required to adopt it. For instance, implementing a Core CIP demands considerably more effort than a CRC, necessitating adequate interest from Celestia client teams. Be aware that negative community feedback may hinder your CIP’s progression beyond the Draft stage.

Core CIPs

For Core CIPs, you’ll need to either provide a client implementation or persuade clients to implement your CIP, given that client implementations are mandatory for Core CIPs to reach the Final stage (see “CIP Process” below).

To effectively present your CIP to client implementers, request a Celestia CoreDevsCall (CDC) call by posting a comment linking your CIP on a CoreDevsCall agenda GitHub Issue.

The CoreDevsCall allows client implementers to:

- Discuss the technical merits of CIPs

- Gauge which CIPs other clients will be implementing

- Coordinate CIP implementation for network upgrades

These calls generally lead to a “rough consensus” on which CIPs should be implemented. Rough consensus here follows the IETF’s RFC 7282, a helpful document for understanding how decisions are made in Celestia CoreDevsCalls. This consensus assumes that CIPs are technically sound and not contentious enough to cause a network split. One important excerpt from the document, drawn from Dave Clark’s 1992 presentation, is the following:

We reject: kings, presidents and voting. We believe in: rough consensus and running code.

:warning: The burden falls on client implementers to estimate community sentiment, which obstructs the technical coordination function of CIPs and CoreDevsCalls. As a CIP shepherd, you can facilitate building community consensus by ensuring the Celestia forum thread for your CIP encompasses as much of the community discussion as possible and represents various stakeholders.

In a nutshell, your role as a champion involves writing the CIP using the style and format described below, guiding discussions in appropriate forums, and fostering community consensus around the idea.

CIP Process

The standardization process for all CIPs in all tracks follows the statuses below:

- Idea: A pre-draft idea not tracked within the CIP Repository.

- Draft: The first formally tracked stage of a CIP in development. A CIP is merged by a CIP Editor into the CIP repository when properly formatted.

- ➡️ Draft: If agreeable, the CIP editor will assign the CIP a number (generally the issue or PR number related to the CIP) and merge your pull request. The CIP editor will not unreasonably deny a CIP.

- ❌ Draft: Reasons for denying Draft status include being too unfocused, too broad, duplication of effort, being technically unsound, not providing proper motivation or addressing backwards compatibility, or not in keeping with the Celestia values and code of conduct.

- Review: A CIP Author marks a CIP as ready for and requesting Peer Review.

- Last Call: The final review window for a CIP before moving to Final. A CIP editor assigns Last Call status and sets a review end date (last-call-deadline), typically 14 days later.

- ❌ Review: A Last Call which results in material changes or substantial unaddressed technical complaints will cause the CIP to revert to Review.

- ✅ Final: A successful Last Call without material changes or unaddressed technical complaints will become Final.

- Final: This CIP represents the final standard. A Final CIP exists in a state of finality and should only be updated to correct errata and add non-normative clarifications. A PR moving a CIP from Last Call to Final should contain no changes other than the status update. Any content or editorial proposed change should be separate from this status-updating PR and committed prior to it.

Other Statuses

- Stagnant: Any CIP in Draft, Review, or Last Call that remains inactive for 6 months or more is moved to Stagnant. Authors or CIP Editors can resurrect a proposal from this state by moving it back to Draft or its earlier status. If not resurrected, a proposal may stay forever in this status.

- Withdrawn: The CIP Author(s) have withdrawn the proposed CIP. This state has finality and can no longer be resurrected using this CIP number. If the idea is pursued at a later date, it is considered a new proposal.

- Living: A special status for CIPs designed to be continually updated and not reach a state of finality. This status caters to dynamic CIPs that require ongoing updates.
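The status flow above can be read as a small state machine. The following is a minimal sketch of that reading, not part of any CIP tooling: the names `ALLOWED` and `can_transition` are hypothetical, and it assumes that a CIP may be withdrawn from any non-final tracked status, which the text does not spell out.

```python
# Hypothetical sketch of the CIP status workflow described above.
# ALLOWED and can_transition are illustrative names, not real CIP tooling.
# Assumption: Withdrawn is reachable from Draft, Review, Last Call, and Stagnant.
ALLOWED = {
    "Idea": {"Draft"},
    "Draft": {"Review", "Stagnant", "Withdrawn"},
    "Review": {"Last Call", "Stagnant", "Withdrawn"},
    # A Last Call either succeeds (Final) or reverts to Review.
    "Last Call": {"Final", "Review", "Stagnant", "Withdrawn"},
    # A Stagnant CIP can be resurrected to Draft or its earlier status.
    "Stagnant": {"Draft", "Review", "Last Call", "Withdrawn"},
    # Final and Withdrawn have finality; Living never reaches finality.
    "Final": set(),
    "Withdrawn": set(),
    "Living": set(),
}


def can_transition(current: str, target: str) -> bool:
    """Return True if this sketch allows moving from `current` to `target`."""
    return target in ALLOWED.get(current, set())
```

For example, `can_transition("Last Call", "Review")` is true because a Last Call with material changes reverts to Review, while `can_transition("Withdrawn", "Draft")` is false because a withdrawn CIP number cannot be resurrected.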

As you embark on this exciting journey of shaping Celestia’s future with your valuable ideas, remember that your contributions matter. Your technical knowledge, creativity, and ability to bring people together will ensure that the CIP process remains engaging, efficient, and successful in fostering a thriving ecosystem for Celestia.

What belongs in a successful CIP

A successful Celestia Improvement Proposal (CIP) should consist of the following parts:

- Preamble: RFC 822 style headers containing metadata about the CIP, including the CIP number, a short descriptive title (limited to a maximum of 44 words), a description (limited to a maximum of 140 words), and the author details. Regardless of the category, the title and description should not include the CIP number. See below for details.

- Abstract: A multi-sentence (short paragraph) technical summary that provides a terse and human-readable version of the specification section. By reading the abstract alone, someone should be able to grasp the essence of what the proposal entails.

- Motivation (optional): A motivation section is crucial for CIPs that seek to change the Celestia protocol. It should clearly explain why the existing protocol specification is insufficient for addressing the problem the CIP solves. If the motivation is evident, this section can be omitted.

- Specification: The technical specification should describe the syntax and semantics of any new feature. The specification should be detailed enough to enable competing, interoperable implementations for any of the current Celestia platforms.

- Parameters: Summary of any parameters introduced by or changed by the CIP.

- Rationale: The rationale elaborates on the specification by explaining the reasoning behind the design and the choices made during the design process. It should discuss alternative designs that were considered and any related work. The rationale should address important objections or concerns raised during discussions around the CIP.

- Backwards Compatibility (optional): For CIPs introducing backwards incompatibilities, this section must describe these incompatibilities and their consequences. The CIP must explain how the author proposes to handle these incompatibilities. If the proposal does not introduce any backwards incompatibilities, this section can be omitted.

- Test Cases (optional): Test cases are mandatory for CIPs affecting consensus changes. They should either be inlined in the CIP as data (such as input/expected output pairs) or included in ../assets/cip-###/<filename>. This section can be omitted for non-Core proposals.

- Reference Implementation (optional): This section contains a reference/example implementation that people can use to better understand or implement the specification. It can be omitted for all CIPs except Core CIPs, for which it is mandatory to reach the Final stage.

- Security Considerations: All CIPs must include a section discussing relevant security implications and considerations. This section should provide information critical for security discussions, expose risks, and be used throughout the proposal’s life-cycle. Examples include security-relevant design decisions, concerns, significant discussions, implementation-specific guidance, pitfalls, an outline of threats and risks, and how they are addressed. CIP submissions lacking a “Security Considerations” section will be rejected. A CIP cannot reach “Final” status without a Security Considerations discussion deemed sufficient by the reviewers.

- Copyright Waiver: All CIPs must be in the public domain. The copyright waiver MUST link to the license file and use the following wording: Copyright and related rights waived via CC0.

CIP Formats and Templates

CIPs should be written in markdown format. There is a CIP template to follow.

CIP Header Preamble

Each CIP must begin with an RFC 822 style header preamble in a markdown table. In order to display on the CIP site, the frontmatter must be formatted in a markdown table. The headers must appear in the following order:

- cip: CIP number (this is determined by the CIP editor)

- title: The CIP title is a few words, not a complete sentence

- description: Description is one full (short) sentence

- author: The list of the author’s or authors’ name(s) and/or username(s), or name(s) and email(s). Details are below.

- discussions-to: The url pointing to the official discussion thread

- status: Draft, Review, Last Call, Final, Stagnant, Withdrawn, Living

- last-call-deadline: The date last call period ends on (Optional field, only needed when status is Last Call)

- type: One of Standards Track, Meta, or Informational

- category: One of Core, Data Availability, Networking, Interface, or CRC (Optional field, only needed for Standards Track CIPs)

- created: Date the CIP was created on

- requires: CIP number(s) (Optional field)

- withdrawal-reason: A sentence explaining why the CIP was withdrawn. (Optional field, only needed when status is Withdrawn)

Headers that permit lists must separate elements with commas.

Headers requiring dates will always do so in the format of ISO 8601 (yyyy-mm-dd).
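As an illustration of the ordering and date rules, here is a minimal sketch of a preamble check. It is a hypothetical helper, not part of the CIP repository: it assumes the preamble has already been parsed into (name, value) pairs, and it checks only header order and date format.

```python
import re
from datetime import date

# Header names in the order required by the preamble rules.
# check_preamble is a hypothetical helper, not real CIP tooling.
HEADER_ORDER = [
    "cip", "title", "description", "author", "discussions-to", "status",
    "last-call-deadline", "type", "category", "created", "requires",
    "withdrawal-reason",
]
DATE_HEADERS = {"last-call-deadline", "created"}
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def is_iso_date(value):
    """Return True only for a valid calendar date written as yyyy-mm-dd."""
    if not ISO_DATE.match(value):
        return False
    try:
        date.fromisoformat(value)
    except ValueError:
        return False
    return True


def check_preamble(headers):
    """Return a list of problems found in a list of (name, value) pairs."""
    problems = []
    names = [name for name, _ in headers]
    for name in names:
        if name not in HEADER_ORDER:
            problems.append(f"unknown header: {name}")
    known = [name for name in names if name in HEADER_ORDER]
    if known != sorted(known, key=HEADER_ORDER.index):
        problems.append("headers are out of order")
    for name, value in headers:
        if name in DATE_HEADERS and not is_iso_date(value):
            problems.append(f"{name} is not a yyyy-mm-dd date: {value}")
    return problems
```

Optional headers may simply be absent; the sketch only requires that the headers that are present keep their relative order.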

author header

The author header lists the names, email addresses or usernames of the authors/owners of the CIP. Those who prefer anonymity may use a username only, or a first name and a username. The format of the author header value must be:

Random J. User <[email protected]>

or

Random J. User (@username)

or

Random J. User (@username) <[email protected]>

if the email address and/or GitHub username is included, and

Random J. User

if neither the email address nor the GitHub username is given.

At least one author must use a GitHub username, in order to get notified on change requests and have the capability to approve or reject them.
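The accepted author formats can be expressed as a regular expression. The sketch below is illustrative only: `AUTHOR_RE`, `parse_authors`, and `has_github_username` are hypothetical names, and the pattern assumes GitHub usernames consist of letters, digits, and hyphens.

```python
import re

# Hypothetical pattern for one author entry: a name, optionally followed by
# "(@username)", optionally followed by "<email>".
AUTHOR_RE = re.compile(
    r"^(?P<name>[^()<>@,]+?)"
    r"(?:\s+\(@(?P<username>[A-Za-z0-9-]+)\))?"
    r"(?:\s+<(?P<email>[^<>\s@]+@[^<>\s@]+)>)?$"
)


def parse_authors(value):
    """Split a comma-separated author header and parse each entry."""
    authors = []
    for entry in value.split(","):
        entry = entry.strip()
        match = AUTHOR_RE.match(entry)
        if match is None:
            raise ValueError(f"malformed author entry: {entry!r}")
        authors.append(match.groupdict())
    return authors


def has_github_username(authors):
    """Check the rule that at least one author lists a GitHub username."""
    return any(author["username"] for author in authors)
```

For instance, `parse_authors("Random J. User (@username), Another Author")` yields two entries, the first with a username and the second with only a name, so `has_github_username` returns true for that header.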

discussions-to header

While a CIP is a draft, a discussions-to header will indicate the URL where the CIP is being discussed.

The preferred discussion URL is a topic on Celestia Forums. The URL cannot point to GitHub pull requests, to any URL which is ephemeral, or to any URL which can get locked over time (e.g. Reddit topics).

type header

The type header specifies the type of CIP: Standards Track, Meta, or Informational. If the type is Standards Track, please include the subcategory (core, networking, interface, or CRC).

category header

The category header specifies the CIP’s category. This is required for standards-track CIPs only.

created header

The created header records the date that the CIP was assigned a number. The date should be in yyyy-mm-dd format, e.g. 2001-08-14.

requires header

CIPs may have a requires header, indicating the CIP numbers that this CIP depends on. If such a dependency exists, this field is required.

A requires dependency is created when the current CIP cannot be understood or implemented without a concept or technical element from another CIP. Merely mentioning another CIP does not necessarily create such a dependency.

Linking to External Resources

Other than the specific exceptions listed below, links to external resources SHOULD NOT be included. External resources may disappear, move, or change unexpectedly.

The process governing permitted external resources is described in CIP-3.

Data Availability Client Specifications

Links to the Celestia Data Availability Client Specifications may be included using normal markdown syntax, such as:

[Celestia Data Availability Client Specifications](https://github.com/celestiaorg/celestia-specs)

Which renders to:

Celestia Data Availability Client Specifications

Consensus Layer Specifications

Links to specific commits of files within the Celestia Consensus Layer Specifications may be included using normal markdown syntax, such as:

[Celestia Consensus Layer Client Specifications](https://github.com/celestiaorg/celestia-specs)

Which renders to:

Celestia Consensus Layer Client Specifications

Networking Specifications

Links to specific commits of files within the Celestia Networking Specifications may be included using normal markdown syntax, such as:

[Celestia P2P Layer Specifications](https://github.com/celestiaorg/celestia-specs)

Which renders as:

Celestia P2P Layer Specifications

Digital Object Identifier System

Links qualified with a Digital Object Identifier (DOI) may be included using the following syntax:

This is a sentence with a footnote.[^1]

[^1]:

```csl-json

{

"type": "article",

"id": 1,

"author": [

{

"family": "Khoury",

"given": "Yaz"

}

],

"DOI": "00.0000/a00000-000-0000-y",

"title": "An Awesome Article",

"original-date": {

"date-parts": [

[2022, 12, 31]

]

},

"URL": "https://sly-hub.invalid/00.0000/a00000-000-0000-y",

"custom": {

"additional-urls": [

"https://example.com/an-interesting-article.pdf"

]

}

}

```

Which renders to:

This is a sentence with a footnote.1

{

"type": "article",

"id": 1,

"author": [

{

"family": "Khoury",

"given": "Yaz"

}

],

"DOI": "00.0000/a00000-000-0000-y",

"title": "An Awesome Article",

"original-date": {

"date-parts": [

[2022, 12, 31]

]

},

"URL": "https://sly-hub.invalid/00.0000/a00000-000-0000-y",

"custom": {

"additional-urls": [

"https://example.com/an-interesting-article.pdf"

]

}

}

See the Citation Style Language Schema for the supported fields. In addition to passing validation against that schema, references must include a DOI and at least one URL.

The top-level URL field must resolve to a copy of the referenced document which can be viewed at zero cost. Values under additional-urls must also resolve to a copy of the referenced document, but may charge a fee.
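The DOI and URL requirements can be checked mechanically. The following is a minimal sketch under stated assumptions: `check_reference` is a hypothetical helper, it does not validate against the Citation Style Language schema, and it does not test whether any URL actually resolves or is free to view.

```python
import json


def check_reference(raw):
    """Return a list of problems for one CSL-JSON reference given as text.

    Hypothetical helper: checks only that a DOI and at least one URL exist.
    """
    ref = json.loads(raw)
    problems = []
    if not ref.get("DOI"):
        problems.append("missing DOI")
    urls = []
    if ref.get("URL"):
        urls.append(ref["URL"])
    # Extra copies of the document may be listed under custom.additional-urls.
    urls.extend(ref.get("custom", {}).get("additional-urls", []))
    if not urls:
        problems.append("missing URL")
    return problems
```

A reference like the example above, which carries both a DOI and a top-level URL, produces an empty list of problems.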

Linking to other CIPs

References to other CIPs should follow the format CIP-N where N is the CIP number you are referring to. Each CIP that is referenced in a CIP MUST be accompanied by a relative markdown link the first time it is referenced, and MAY be accompanied by a link on subsequent references.

The link MUST always be done via relative paths so that the links work in this GitHub repository, forks of this repository, the main CIPs site, mirrors of the main CIP site, etc.

For example, you would link to this CIP as ./cip-1.md.
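A short sketch of the linking rule follows. Both function names are hypothetical, and the file naming `cip-N.md` is taken from the `./cip-1.md` example above.

```python
from urllib.parse import urlparse


def cip_link(number):
    """Build a relative markdown link to another CIP (hypothetical helper)."""
    return f"[CIP-{number}](./cip-{number}.md)"


def is_relative(target):
    """Return True if a link target has no scheme, no host, and no leading slash."""
    parts = urlparse(target)
    return not parts.scheme and not parts.netloc and not target.startswith("/")
```

Here `cip_link(1)` produces `[CIP-1](./cip-1.md)`, and `is_relative` rejects absolute targets such as a full `https://` URL.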

Auxiliary Files

Images, diagrams and auxiliary files should be included in a subdirectory of the assets folder for that CIP as follows: assets/cip-N (where N is to be replaced with the CIP number). When linking to an image in the CIP, use relative links such as ../assets/cip-1/image.png.

Transferring CIP Ownership

It occasionally becomes necessary to transfer ownership of CIPs to a new champion. In general, we’d like to retain the original author as a co-author of the transferred CIP, but that’s really up to the original author. A good reason to transfer ownership is because the original author no longer has the time or interest in updating it or following through with the CIP process, or has fallen off the face of the ’net (i.e. is unreachable or isn’t responding to email). A bad reason to transfer ownership is because you don’t agree with the direction of the CIP. We try to build consensus around a CIP, but if that’s not possible, you can always submit a competing CIP.

If you are interested in assuming ownership of a CIP, send a message asking to take over, addressed to both the original author and the CIP editor. If the original author doesn’t respond to the message in a timely manner, the CIP editor will make a unilateral decision (it’s not like such decisions can’t be reversed :)).

CIP Editors

The current CIP editors are

Emeritus CIP editors are

- Yaz Khoury (@YazzyYaz)

If you would like to become a CIP editor, please check CIP-2.

CIP Editor Responsibilities

For each new CIP that comes in, an editor does the following:

- Read the CIP to check if it is ready: sound and complete. The ideas must make technical sense, even if they don’t seem likely to get to final status.

- The title should accurately describe the content.

- Check the CIP for language (spelling, grammar, sentence structure, etc.), markup (GitHub flavored Markdown), and code style.

If the CIP isn’t ready, the editor will send it back to the author for revision, with specific instructions.

Once the CIP is ready for the repository, the CIP editor will:

- Assign a CIP number (generally the next unused CIP number, but the decision is with the editors)

- Merge the corresponding pull request

- Send a message back to the CIP author with the next step.

Many CIPs are written and maintained by developers with write access to the Celestia codebase. The CIP editors monitor CIP changes, and correct any structure, grammar, spelling, or markup mistakes we see.

The editors don’t pass judgment on CIPs. We merely do the administrative & editorial part.

Style Guide

Titles

The title field in the preamble:

- Should not include the word “standard” or any variation thereof; and

- Should not include the CIP’s number.

Descriptions

The description field in the preamble:

- Should not include the word “standard” or any variation thereof; and

- Should not include the CIP’s number.

CIP numbers

When referring to a CIP with a category of CRC, it must be written in the hyphenated form CRC-X where X is that CIP’s assigned number.

When referring to CIPs with any other category, it must be written in the hyphenated form CIP-X where X is that CIP’s assigned number.
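The naming rule reduces to a choice of prefix. A minimal sketch, using a hypothetical helper name:

```python
from typing import Optional


def format_reference(number: int, category: Optional[str] = None) -> str:
    """Return CRC-X for CIPs in the CRC category and CIP-X otherwise."""
    prefix = "CRC" if category == "CRC" else "CIP"
    return f"{prefix}-{number}"
```

Under this sketch, a CIP in the Core category with number 10 is written CIP-10, while a hypothetical CRC-category CIP with number 20 would be written CRC-20.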

RFC 2119 and RFC 8174

CIPs are encouraged to follow RFC 2119 and RFC 8174 for terminology and to insert the following at the beginning of the Specification section:

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

History

This document was derived heavily from Ethereum’s EIP Process written by Hudson Jameson which is derived from Bitcoin’s BIP-0001 written by Amir Taaki which in turn was derived from Python’s PEP-0001. In many places text was simply copied and modified. Although the PEP-0001 text was written by Barry Warsaw, Jeremy Hylton, and David Goodger, they are not responsible for its use in the Celestia Improvement Process, and should not be bothered with technical questions specific to Celestia or the CIP. Please direct all comments to the CIP editors.

Copyright

Copyright and related rights waived via CC0.

| cip | 2 |

|---|---|

| title | CIP Editor Handbook |

| description | Handy reference for CIP editors and those who want to become one |

| author | Yaz Khoury (@YazzyYaz) |

| discussions-to | https://forum.celestia.org |

| status | Draft |

| type | Informational |

| created | 2023-04-13 |

| requires | CIP-1 |

Abstract

CIP stands for Celestia Improvement Proposal. A CIP is a design document providing information to the Celestia community, or describing a new feature for Celestia or its processes or environment. The CIP should provide a concise technical specification of the feature and a rationale for the feature. The CIP author is responsible for building consensus within the community and documenting dissenting opinions.

This CIP describes the recommended process for becoming a CIP editor.

Specification

Application and Onboarding Process

Anyone having a good understanding of the CIP standardization and network upgrade process, intermediate level experience on the core side of the Celestia blockchain, and willingness to contribute to the process management may apply to become a CIP editor. Potential CIP editors should have the following skills:

- Good communication skills

- Ability to handle contentious discourse

- 1-5 spare hours per week

- Ability to understand “rough consensus”

The best available resource to understand the CIP process is CIP-1. Anyone wishing to become a CIP editor MUST understand this document. Afterwards, participating in the CIP process by commenting on and suggesting improvements to PRs and issues is recommended, as it familiarizes applicants with the procedure. The contributions of newer editors should be monitored by other CIP editors.

Anyone meeting the above requirements may make a pull request adding themselves as a CIP editor and adding themselves to the editor list in CIP-1. If every existing CIP editor approves, the author becomes a full CIP editor. This should notify the editor of relevant new proposals submitted in the CIPs repository, and they should review and merge those pull requests.

Copyright

Copyright and related rights waived via CC0.

| cip | 3 |

|---|---|

| title | Process for Approving External Resources |

| description | Requirements and process for allowing new origins of external resources |

| author | Yaz Khoury (@YazzyYaz) |

| discussions-to | https://forum.celestia.org |

| status | Draft |

| type | Meta |

| created | 2023-04-13 |

| requires | CIP-1 |

Abstract

Celestia improvement proposals (CIPs) occasionally link to resources external to this repository. This document sets out the requirements for origins that may be linked to, and the process for approving a new origin.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Definitions

- Link: Any method of referring to a resource, including: markdown links, anchor tags (<a>), images, citations of books/journals, and any other method of referencing content not in the current resource.

- Resource: A web page, document, article, file, book, or other media that contains content.

- Origin: A publisher/chronicler of resources, like a standards body (e.g. W3C) or a system of referring to documents (e.g. Digital Object Identifier System).

Requirements for Origins

Permissible origins MUST provide a method of uniquely identifying a particular revision of a resource. Examples of such methods may include git commit hashes, version numbers, or publication dates.

Permissible origins MUST have a proven history of availability. An origin existing for at least ten years and reliably serving resources would be sufficient (but not necessary) to satisfy this requirement.

Permissible origins MUST NOT charge a fee for accessing resources.

Origin Removal

Any approved origin that ceases to satisfy the above requirements MUST be removed from CIP-1. If a removed origin later satisfies the requirements again, it MAY be re-approved by following the process described in Origin Approval.

Finalized CIPs (eg. those in the Final or Withdrawn statuses) SHOULD NOT be updated to remove links to these origins.

Non-Finalized CIPs MUST remove links to these origins before changing statuses.

Origin Approval

Should the editors determine that an origin meets the requirements above, CIP-1 MUST be updated to include:

- The name of the allowed origin;

- The permitted markup and formatting required when referring to resources from the origin; and

- A fully rendered example of what a link should look like.

Rationale

Unique Identifiers

If it is impossible to uniquely identify a version of a resource, it becomes impractical to track changes, which makes it difficult to ensure immutability.

Availability

If it is possible to implement a standard without a linked resource, then the linked resource is unnecessary. If it is impossible to implement a standard without a linked resource, then that resource must be available for implementers.

Free Access

The Celestia ecosystem is built on openness and free access, and the CIP process should follow those principles.

Copyright

Copyright and related rights waived via CC0.

| cip | 4 |

|---|---|

| title | Standardize data expiry time for pruned nodes |

| description | Standardize default data expiry time for pruned nodes to 30 days + 1 hour worth of seconds (2595600 seconds). |

| author | Mustafa Al-Bassam (@musalbas), Rene Lubov (@renaynay), Ramin Keene (@ramin) |

| discussions-to | https://forum.celestia.org/t/cip-standardize-data-expiry-time-for-pruned-nodes/1326 |

| status | Final |

| type | Standards Track |

| category | Data Availability |

| created | 2023-11-23 |

Abstract

This CIP standardizes the default expiry time of historical blocks for pruned (non-archival) nodes to 30 days + 1 hour worth of seconds (2595600 seconds).

Motivation

The purpose of data availability layers such as Celestia is to ensure that block data is provably published to the Internet, so that applications and rollups can know what the state of their chain is, and store that data. Once the data is published, data availability layers do not inherently guarantee that historical data will be permanently stored and remain retrievable. This task is left to block archival nodes on the network, which may be run by professional service providers.

Block archival nodes are nodes that store a full copy of the historical chain, whereas pruned nodes store only the latest blocks. Consensus nodes running Tendermint are able to prune blocks by specifying a min-retain-blocks parameter in their configuration. Data availability nodes running celestia-node will also soon have the ability to prune blocks.

It is useful to standardize a default expiry time for blocks for pruned nodes, so that:

- Rollups and applications have an expectation of how long data will be retrievable from pruned nodes before it can only be retrieved from block archival nodes.

- Light nodes that want to query data in namespaces can discover pruned nodes over the peer-to-peer network and know which blocks they likely have, versus non-pruned nodes.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

Nodes that prune block data SHOULD store and distribute data in blocks that were created in the last 30 days + 1 hour worth of seconds (2595600 seconds). The additional 1 hour acts as a buffer to account for clock drift.

On the Celestia data availability network, both pruned and non-pruned nodes MAY advertise themselves under the existing full peer discovery tag, in which case the nodes MUST store and distribute data in blocks that were created in the last 30 days + 1 hour worth of seconds (2595600 seconds).

Non-pruned nodes MAY advertise themselves under a new archival tag, in which case the nodes MUST store and distribute data in all blocks.

Data availability sampling light nodes SHOULD sample blocks created in the last 30 days worth of seconds (the sampling window of 2592000 seconds).

Definitions

Sampling Window - the period within which light nodes should sample blocks, specified at 30 days worth of seconds.

Pruning Window - the period within which both pruned and non-pruned full storage nodes must store and distribute data in blocks, specified at 30 days + 1 hour worth of seconds.

Rationale

30 days worth of seconds (2592000 seconds) is chosen for the following reasons:

- Data availability sampling light nodes need to at least sample data within the Tendermint weak subjectivity period of 21 days in order to independently verify the data availability of the chain, and so they need to be able to sample data up to at least 21 days old.

- 30 days worth of seconds (2592000 seconds) ought to be a reasonable amount of time for data to be downloaded from the chain by any application that needs it.

Backwards Compatibility

The implementation of pruned nodes will break backwards compatibility in a few ways:

- Light nodes running on older software (without the sampling window) will not be able to sample historical data (blocks older than 30 days) as nodes advertising on the full tag will no longer be expected to provide historical blocks.

- Similarly, full nodes running on older software will not be able to sync historical blocks without discovering non-pruned nodes on the archival tag.

- Requesting blobs from historical blocks via a light node or full node will not be possible without discovering non-pruned nodes on the archival tag.

Reference Implementation

Data Availability Sampling Window (light nodes)

Implementation for light nodes can be quite simple: a satisfactory implementation simply does not sample headers whose timestamp falls outside the given sampling window.

Given a hypothetical “sample” function that performs data availability sampling of incoming extended headers from the network, the decision to sample or not should be taken by inspecting the header’s timestamp, and ignoring it in any sampling operation if the duration between the header’s timestamp and the current time exceeds the duration of the sampling window. For example:

const windowSize = time.Second * 86400 * 30 // 30 days worth of seconds (2592000 seconds)

func sample(header Header) error{

if time.Since(header.Time()) > windowSize {

return nil // do not perform any sampling

}

// continue with rest of sampling operation

}

Example implementation by celestia node

Storage Pruning

Pruning of data outside the availability window will be highly implementation specific and dependent on how data storage is engineered.

A satisfactory implementation is one in which any node implementing storage pruning may, provided it does not advertise itself to peers as an archival node, discard stored data outside the 30 days + 1 hour worth of seconds (2595600 seconds) availability window. A variety of options exist for how an implementation might schedule pruning of data, and there are no requirements around how this is done. The only requirement is that the time guarantees around data within the availability window are respected, and that data availability nodes correctly advertise themselves to peers.

An example implementation of storage pruning (WIP at time of writing) in celestia node

Security Considerations

As discussed in Rationale, data availability sampling light nodes need to at least sample data within the Tendermint weak subjectivity period of 21 days in order to independently verify the data availability of the chain. 30 days worth of seconds (2592000 seconds) exceeds this.

Copyright

Copyright and related rights waived via CC0.

| cip | 5 |

|---|---|

| title | Rename data availability to data publication |

| description | Renaming data availability to data publication to better reflect the message |

| author | msfew (@fewwwww) [email protected], Kartin [email protected], Xiaohang Yu (@xhyumiracle) |

| discussions-to | https://forum.celestia.org/t/informational-cip-rename-data-availability-to-data-publication/1287 |

| status | Review |

| type | Informational |

| created | 2023-11-06 |

Abstract

The term data availability isn’t as straightforward as it should be and could lead to misunderstandings within the community. To address this, this CIP proposes replacing data availability with data publication.

Motivation

The term data availability has caused confusion within the community due to its lack of intuitive clarity. For instance, in Celestia’s Glossary, there isn’t a clear definition of data availability; instead, it states that data availability addresses the question of whether this data has been published. Additionally, numerous community members have misinterpreted data availability as meaning data storage.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

The term data availability is RECOMMENDED to be renamed to data publication.

Data availability in existing works, such as research papers and docs, and cohesive terms, such as data availability sampling, MAY retain the existing wording.

Rationale

Motivations:

- Data publication is the act of making data publicly accessible. In Celestia’s context, it means the block data was actually published to the network and ready to be accessed, downloaded, and verified. This aligns more precisely with the intended meaning, which revolves around whether data has been published.

- The community already favors and commonly uses the term data publication.

- Data publication maintains a similar structure to data availability, making it easier for those familiar with the latter term to transition.

Alternative designs:

- Proof of publication: While intuitive, it differs in structure from data availability and may be too closely associated with terms like proof of work, potentially causing confusion within consensus-related mechanisms.

- Data availability proof: While logically coherent, it may create issues when used in conjunction with other terms, as the emphasis falls on “proof”. For instance, “verify a rollup’s data availability” and “verify a rollup’s data availability proof” might not refer to the same concept.

- Data caching: While indicative of the intended time frame of the existence of proof of publication, the term “caching” is not widely adopted within the context of blockchain networks.

Copyright

Copyright and related rights waived via CC0.

| cip | 6 |

|---|---|

| title | Minimum gas price enforcement |

| description | Enforce payment of the gas for a transaction based on a governance modifiable global minimum gas price |

| author | Callum Waters (@cmwaters) |

| discussions-to | https://forum.celestia.org/t/cip-006-price-enforcement/1351 |

| status | Final |

| type | Standards Track |

| category | Core |

| created | 2023-11-30 |

Abstract

Implement a global, consensus-enforced minimum gas price on all transactions. Ensure that all transactions can be decoded and have a valid signer with sufficient balance to cover the cost of the gas allocated in the transaction. The minimum gas price can be modified via on-chain governance.

| Parameter | Default | Summary | Changeable via Governance |

|---|---|---|---|

| minfee.MinimumGasPrice | 0.000001 utia | Globally set minimum price per unit of gas | True |

Motivation

The Celestia network was launched with the focus on having all the necessary protocols in place to provide secure data availability first and to focus on building an effective fee market system that correctly captures that value afterwards.

This is not to say that no fee market system exists. Celestia inherited the default system provided by the Cosmos SDK. However, the present system has several inadequacies that need to be addressed in order to achieve better pricing certainty and transaction guarantees for its users and to find a “fair” price for both the sellers (validators) and buyers (rollups) of data availability.

This proposal should be viewed as a foundational component of a broader effort and thus its scope is strictly focused towards the enforcement of some minimum fee: ensuring that the value captured goes to those that provided that value. It does not pertain to actual pricing mechanisms, tipping, refunds, futures and other possible future works. Dynamic systems like EIP-1559 and uniform price auctions can and should be prioritised only once the network starts to experience congestion over block space.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

All transactions MUST be decodable by the network. They MUST have a recognised signer, a signature that authenticates the signer, a fee and a gas. This does not imply that they need be completely valid; they just need a minimum degree of validity that allows the network to charge the signer for the allocation of gas for performing the computation, networking and storage.

We define the gas price as the fee divided by the gas. In other words, this is the amount of utia paid per unit of gas. All transactions MUST have a gas price that is greater than or equal to a minimum network-wide agreed upon gas price.

Both of these rules are block validity rules. Correct validators will vote nil or against the proposal if the proposed block contains any transaction that violates these two rules.

The global minimum gas price can be modified via on-chain governance.

Note that validators may in addition set their own constraints as to what they deem acceptable in a proposed block. For example, they may only accept transactions with a gas price that is higher than their locally configured minimum.

This minimum gas price SHOULD be queryable by client implementations.

Rationale

The primary rationale for this decision is to prevent the payment system for data availability from migrating off-chain and manifesting in secondary markets. As a concrete example, validators would currently earn more revenue if they convinced users to pay them out of band and set the transaction fee to 0, such that all revenue went to the proposer and none to the rest of the validators/delegators. Such arrangements are known as off-chain agreements (OCAs).

There are two other reasons:

- Better UX: clients or wallets can query the on-chain state for the global min gas price, whereas currently each node might have a separate min gas price and, given that the proposer is anonymous, it’s difficult to know whether the user is paying a sufficient fee.

- Easier to coordinate: a governance proposal that is passed automatically updates the state machine, whereas a manual change requires telling all nodes to halt, modify their config, and restart their node.

Lastly, this change removes the possible incongruity that would form when it comes to gossiping transactions when consensus nodes use different minimum gas prices.

The minimum gas price defaults to a negligible value (0.000001 utia) because this is the minimum gas price that would result in a tx priority >= 1 given the current tx prioritization implementation.

$gasPrice = fee / gas$

$priority = fee * priorityScalingFactor / gas$

$priority = gasPrice * priorityScalingFactor$

Note priorityScalingFactor is currently 1,000,000.
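To see why 0.000001 is the smallest gas price that yields a priority of at least 1, the formula can be evaluated with integers. The Go sketch below is an illustration only: txPriority is a hypothetical function, and the use of truncating integer division is an assumption about how the priority is computed.

```go
package main

import "fmt"

// priorityScalingFactor is the value quoted in the text above.
const priorityScalingFactor = 1_000_000

// txPriority evaluates priority = fee * priorityScalingFactor / gas using
// truncating integer division (an assumption of this sketch). Overflow for
// very large fees is not handled here.
func txPriority(feeUtia, gas int64) int64 {
	if gas <= 0 {
		return 0
	}
	return feeUtia * priorityScalingFactor / gas
}

func main() {
	fmt.Println(txPriority(1, 1_000_000)) // gas price 0.000001  -> 1
	fmt.Println(txPriority(1, 2_000_000)) // gas price 0.0000005 -> 0
	fmt.Println(txPriority(200, 100_000)) // gas price 0.002     -> 2000
}
```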

Backwards Compatibility

This requires a modification to the block validity rules and thus breaks the state machine. It will need to be introduced in a major release.

Wallets and other transaction submitting clients will need to monitor the minimum gas price and adjust accordingly.

Test Cases

The target for testing will be to remove the ability for block proposers to offer block space to users in a way that circumvents the fee system currently in place.

Reference Implementation

In order to ensure transaction validity with respect to having a minimum balance to cover the gas allocated, the celestia-app go implementation requires a small change to ProcessProposal, namely:

sdkTx, err := app.txConfig.TxDecoder()(tx)

if err != nil {

- // we don't reject the block here because it is not a block validity

- // rule that all transactions included in the block data are

- // decodable

- continue

+ return reject()

}

This will now reject undecodable transactions. Decodable transactions will still have to pass the AnteHandlers before being accepted in a block so no further change is required.

A mechanism to enforce a minimum fee is already in place in the DeductFeeDecorator. Currently, the decorator uses a min gas price sourced from the validator’s local config. To introduce a network-wide constraint on min gas price, we introduce a new param.Subspace=minfee, which contains the global min gas price. If the param is unpopulated, it defaults to 0.002utia (which matches the current local min gas price).

The DeductFeeDecorator antehandler will receive a new ante.TxFeeChecker function called ValidateTxFee which will have access to the same param.Subspace. For CheckTx, it will use the max of either the global min gas price or the local min gas price. For PrepareProposal, ProcessProposal and DeliverTx it will only check using the global min gas price and ignore the locally set min gas price.

The minimum gas price can already be queried through the gRPC client as can any other parameter.

Security Considerations

Any modification to the block validity rules (through PrepareProposal and ProcessProposal) introduces implementation risk that may cause the chain to halt.

Given a voting period of one week, it will take at least one week for the network to update the minimum gas price. This could potentially be too slow given large swings in the underlying price of TIA.

Copyright

Copyright and related rights waived via CC0.

| cip | 7 |

|---|---|

| title | Managing Working Groups in the Celestia Improvement Proposal Process |

| description | A guide to effectively managing working groups within the Celestia Improvement Proposal process. |

| author | Yaz Khoury [email protected] |

| discussions-to | https://forum.celestia.org/t/cip-for-working-group-best-practices/1343 |

| status | Draft |

| type | Informational |

| created | 2023-11-29 |

Abstract

This document provides a detailed guide for managing working groups within the Celestia Improvement Proposal (CIP) process. It draws from best practices in organizations like the IETF, focusing on the formation, management, and closure of working groups, ensuring their alignment with the overarching goals of the Celestia network.

Motivation

The successful implementation of the CIP process requires a structured approach to managing working groups. These groups are pivotal in addressing various aspects of Celestia’s core protocol and ecosystem. Effective management ensures collaborative progress and helps align group outputs with Celestia’s broader objectives.

Specification

1. Formation of Working Groups

- Identify key areas needing attention.

- Announce formation and invite participation.

- Appoint chairs or leaders.

2. Defining Scope and Goals

- Draft a charter for each group.

- Set realistic and measurable goals.

3. Establishing Procedures

- Decide on a consensus process (e.g. rough consensus).

- Schedule regular meetings.

- Implement documentation and reporting systems for each meeting.

4. Collaboration and Communication

- Utilize tools like GitHub, Slack, Telegram and video conferencing.

- Foster an environment of open communication.

5. Conflict Resolution

- Establish a conflict resolution mechanism.

- Define the role of chairs in conflict management.

6. Review and Adaptation

- Regularly review progress.

- Adapt scope or processes as needed.

7. Integration with the Larger Process

- Ensure alignment with the overall CIP process.

- Create a feedback loop with the community.

8. Closure

- Define criteria for completion.

- Document and share outcomes.

- Conduct a retrospective.

Rationale

The rationale for this approach is based on established practices in standardization bodies. By applying these methods, Celestia can ensure that its working groups are productive, inclusive, and effectively contribute to the network’s development.

Security Considerations

The management of working groups primarily involves process and communication security. Ensuring transparent and secure communication channels and documenting management practices is essential.

Copyright

Copyright and related rights waived via CC0.

| cip | 8 |

|---|---|

| title | Roles and Responsibilities of Working Group Chairs in the CIP Process |

| description | Outlining the key roles and responsibilities of working group chairs within the Celestia Improvement Proposal process. |

| author | Yaz Khoury [email protected] |

| discussions-to | https://forum.celestia.org/t/cip-for-wg-chair-responsibilities/1344 |

| status | Draft |

| type | Informational |

| created | 2023-11-29 |

Abstract

This document details the roles and responsibilities of working group chairs in the Celestia Improvement Proposal (CIP) process. Inspired by best practices in standardization processes like Ethereum’s EIP and the IETF, it provides a comprehensive guide on chair duties ranging from facilitation of discussions to conflict resolution.

Motivation

Effective leadership within working groups is crucial for the success of the CIP process. Chairs play a pivotal role in guiding discussions, driving consensus, and ensuring that the groups’ efforts align with Celestia’s broader goals. This document aims to establish clear guidelines for these roles to enhance the efficiency and productivity of the working groups.

Specification

Roles of Working Group Chairs

- Facilitate Discussions: Chairs should ensure productive, focused, and inclusive meetings.

- Drive Consensus: Guide the group towards consensus and make decisions when necessary.

- Administrative Responsibilities: Oversee meeting scheduling, agenda setting, and record-keeping.

- Communication Liaison: Act as a bridge between the working group and the wider community, ensuring transparency and effective communication.

- Guide Work Forward: Monitor progress and address challenges, keeping the group on track with its goals.

- Ensure Adherence to Process: Uphold the established CIP process and guide members in following it.

- Conflict Resolution: Actively manage and resolve conflicts within the group.

- Reporting and Accountability: Provide regular reports on progress and be accountable for the group’s achievements.

Rationale

Drawing inspiration from established practices in bodies like the IETF and Ethereum’s EIP, this proposal aims to create a structured and effective approach to managing working groups in Celestia. Clear definition of chair roles will facilitate smoother operation, better decision-making, and enhanced collaboration within the groups.

Security Considerations

The primary security considerations involve maintaining the confidentiality and integrity of discussions and decisions made within the working groups. Chairs should ensure secure communication channels and safeguard sensitive information related to the group’s activities.

Copyright

Copyright and related rights waived via CC0.

| cip | 9 |

|---|---|

| title | Packet Forward Middleware |

| description | Adopt Packet Forward Middleware for multi-hop IBC and path unwinding |

| author | Alex Cheng (@akc2267) |

| discussions-to | https://forum.celestia.org/t/cip-packet-forward-middleware/1359 |

| status | Final |

| type | Standards Track |

| category | Core |

| created | 2023-12-01 |

Abstract

This CIP integrates Packet Forward Middleware, the IBC middleware that enables multi-hop IBC and path unwinding to preserve fungibility for IBC-transferred tokens.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

The packet-forward-middleware is an IBC middleware module built for Cosmos blockchains utilizing the IBC protocol. A chain which incorporates the packet-forward-middleware is able to route incoming IBC packets from a source chain to a destination chain.

- Celestia MUST import and integrate Packet Forward Middleware.

- This integration SHOULD use defaults for the following configs: [Retries On Timeout, Timeout Period, Refund Timeout, Fee Percentage].

  - Retries On Timeout - how many times will a forward be re-attempted in the case of a timeout.

  - Timeout Period - how long can a forward be in progress before giving up.

  - Refund Timeout - how long can a forward be in progress before issuing a refund back to the original source chain.

  - Fee Percentage - % of the forwarded packet amount which will be subtracted and distributed to the community pool.

- Celestia MAY choose different values for these configs if the community would rather have auto-retries, different timeout periods, and/or collect fees from forwarded packets.

Rationale

The defaults set in Packet Forward Middleware ensure sensible timeouts so user funds are returned in a timely manner after incomplete transfers. Timeout Period follows IBC defaults and Refund Timeout is 28 days to ensure funds don’t remain stuck in the packet forward module. Retries On Timeout is defaulted to 0, as app developers or CLI users may want to control this themselves. Fee Percentage is defaulted to 0 for superior user experience; however, the Celestia community may decide to collect fees as a revenue source.

Backwards Compatibility

No backward compatibility issues found.

Reference Implementation

The integration steps include the following:

- Import the PFM, initialize the PFM Module & Keeper, initialize the store keys and module params, and initialize the Begin/End Block logic and InitGenesis order.

- Configure the IBC application stack (including the transfer module).

- Configure additional options such as the timeout period, number of retries on timeout, refund timeout period, and fee percentage.

Integration of the PFM should take approximately 20 minutes.

Example integration of the Packet Forward Middleware

// app.go

// Import the packet forward middleware

import (

"github.com/cosmos/ibc-apps/middleware/packet-forward-middleware/v7/packetforward"

packetforwardkeeper "github.com/cosmos/ibc-apps/middleware/packet-forward-middleware/v7/packetforward/keeper"

packetforwardtypes "github.com/cosmos/ibc-apps/middleware/packet-forward-middleware/v7/packetforward/types"

)

...

// Register the AppModule for the packet forward middleware module

ModuleBasics = module.NewBasicManager(

...

packetforward.AppModuleBasic{},

...

)

...

// Add packet forward middleware Keeper

type App struct {

...

PacketForwardKeeper *packetforwardkeeper.Keeper

...

}

...

// Create store keys

keys := sdk.NewKVStoreKeys(

...

packetforwardtypes.StoreKey,

...

)

...

// Initialize the packet forward middleware Keeper

// It's important to note that the PFM Keeper must be initialized before the Transfer Keeper

app.PacketForwardKeeper = packetforwardkeeper.NewKeeper(

appCodec,

keys[packetforwardtypes.StoreKey],

app.GetSubspace(packetforwardtypes.ModuleName),

app.TransferKeeper, // will be zero-value here, reference is set later on with SetTransferKeeper.

app.IBCKeeper.ChannelKeeper,

app.DistrKeeper,

app.BankKeeper,

app.IBCKeeper.ChannelKeeper,

)

// Initialize the transfer module Keeper

app.TransferKeeper = ibctransferkeeper.NewKeeper(

appCodec,

keys[ibctransfertypes.StoreKey],

app.GetSubspace(ibctransfertypes.ModuleName),

app.PacketForwardKeeper,

app.IBCKeeper.ChannelKeeper,

&app.IBCKeeper.PortKeeper,

app.AccountKeeper,

app.BankKeeper,

scopedTransferKeeper,

)

app.PacketForwardKeeper.SetTransferKeeper(app.TransferKeeper)

// See the section below for configuring an application stack with the packet forward middleware

...

// Register packet forward middleware AppModule

app.moduleManager = module.NewManager(

...

packetforward.NewAppModule(app.PacketForwardKeeper),

)

...

// Add packet forward middleware to begin blocker logic

app.moduleManager.SetOrderBeginBlockers(

...

packetforwardtypes.ModuleName,

...

)

// Add packet forward middleware to end blocker logic

app.moduleManager.SetOrderEndBlockers(

...

packetforwardtypes.ModuleName,

...

)

// Add packet forward middleware to init genesis logic

app.moduleManager.SetOrderInitGenesis(

...

packetforwardtypes.ModuleName,

...

)

// Add packet forward middleware to init params keeper

func initParamsKeeper(appCodec codec.BinaryCodec, legacyAmino *codec.LegacyAmino, key, tkey storetypes.StoreKey) paramskeeper.Keeper {

...

paramsKeeper.Subspace(packetforwardtypes.ModuleName).WithKeyTable(packetforwardtypes.ParamKeyTable())

...

}

Configuring the transfer application stack with Packet Forward Middleware

Here is an example of how to create an application stack using transfer and packet-forward-middleware.

The following transferStack is configured in app/app.go and added to the IBC Router.

The in-line comments describe the execution flow of packets between the application stack and IBC core.

For more information on configuring an IBC application stack, see the ibc-go docs.

// Create Transfer Stack

// SendPacket, since it is originating from the application to core IBC:

// transferKeeper.SendPacket -> packetforward.SendPacket -> channel.SendPacket

// RecvPacket, message that originates from core IBC and goes down to app, the flow is the other way

// channel.RecvPacket -> packetforward.OnRecvPacket -> transfer.OnRecvPacket

// transfer stack contains (from top to bottom):

// - Packet Forward Middleware

// - Transfer

var transferStack ibcporttypes.IBCModule

transferStack = transfer.NewIBCModule(app.TransferKeeper)

transferStack = packetforward.NewIBCMiddleware(

transferStack,

app.PacketForwardKeeper,

0, // retries on timeout

packetforwardkeeper.DefaultForwardTransferPacketTimeoutTimestamp, // forward timeout

packetforwardkeeper.DefaultRefundTransferPacketTimeoutTimestamp, // refund timeout

)

// Add transfer stack to IBC Router

ibcRouter.AddRoute(ibctransfertypes.ModuleName, transferStack)

Configurable options in the Packet Forward Middleware

The Packet Forward Middleware has several configurable options available when initializing the IBC application stack. You can see these passed in as arguments to packetforward.NewIBCMiddleware; they include the number of retries that will be performed on a forward timeout, the timeout period that will be used for a forward, and the timeout period that will be used for performing refunds in the case that a forward is taking too long.

Additionally, there is a fee percentage parameter that can be set in InitGenesis. This optional parameter can be used to take a fee from each forwarded packet, which will then be distributed to the community pool. In the OnRecvPacket callback, ForwardTransferPacket is invoked, which will attempt to subtract a fee from the forwarded packet amount if the fee percentage is non-zero.

- Retries On Timeout: how many times will a forward be re-attempted in the case of a timeout.

- Timeout Period: how long can a forward be in progress before giving up.

- Refund Timeout: how long can a forward be in progress before issuing a refund back to the original source chain.

- Fee Percentage: % of the forwarded packet amount which will be subtracted and distributed to the community pool.

Test Cases

The targets for testing will be:

- Successful path unwinding from gaia-testnet-1 to celestia-testnet to gaia-testnet-2

- Proper refunding in a multi-hop IBC flow if any step returns a recv_packet error

- Ensure Retries On Timeout config works, with the intended number of retry attempts upon hitting the Timeout Period

- Ensure Refund Timeout issues a refund when a forward is in progress for too long

- If Fee Percentage is not set to 0, ensure the proper token amount is claimed from packets and sent to the Community Pool.

Security Considerations

The origin sender (sender on the first chain) is retained in case of a failure to receive the packet (max-timeouts or ack error) on any chain in the sequence, so funds will be refunded to the right sender in the case of an error.

Any intermediate receivers, though, are no longer used. PFM will receive the funds into the hashed account (hash of sender from previous chain + channel received on the current chain). This gives a deterministic account for the origin sender to see events on intermediate chains. With PFM’s atomic acks, there is no possibility of funds getting stuck on an intermediate chain: they will either make it to the final destination successfully or be refunded back to the origin sender.

We recommend that users set the intermediate receivers to a string such as “pfm” (since PFM does not care what the intermediate receiver is), so that if users accidentally send a packet intended for PFM to a chain that does not have PFM, they will get an ack error and be refunded instead of the funds landing in the intermediate receiver account. This results in a PFM detection mechanism with a graceful error.
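A minimal sketch of this recommendation follows. It builds the JSON memo for a single forward hop and pairs it with the placeholder intermediate receiver “pfm”. The memo layout (a forward object with receiver, port, and channel keys) follows the middleware's documented memo format as far as we are aware; the Go type names, the helper buildForwardMemo, and the example address and channel are all hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// forwardMetadata mirrors the "forward" object of a PFM memo.
// The Go type itself is illustrative, not the middleware's own type.
type forwardMetadata struct {
	Receiver string `json:"receiver"` // final destination address
	Port     string `json:"port"`
	Channel  string `json:"channel"`
}

type forwardMemo struct {
	Forward forwardMetadata `json:"forward"`
}

// intermediateReceiver is the placeholder receiver for the hop handled by PFM.
// A chain without PFM cannot decode it as an address, so the transfer fails
// with an ack error and is refunded.
const intermediateReceiver = "pfm"

// buildForwardMemo (hypothetical helper) returns the JSON memo for one hop.
func buildForwardMemo(finalReceiver, port, channel string) (string, error) {
	bz, err := json.Marshal(forwardMemo{Forward: forwardMetadata{
		Receiver: finalReceiver,
		Port:     port,
		Channel:  channel,
	}})
	if err != nil {
		return "", err
	}
	return string(bz), nil
}

func main() {
	// Placeholder address and channel, for illustration only.
	memo, err := buildForwardMemo("cosmos1exampledestination", "transfer", "channel-0")
	if err != nil {
		panic(err)
	}
	fmt.Printf("receiver=%s memo=%s\n", intermediateReceiver, memo)
}
```

The receiver and memo would then be set on the IBC transfer sent to the intermediate chain.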

Copyright

Copyright and related rights waived via CC0.

| cip | 10 |

|---|---|

| title | Coordinated network upgrades |

| description | Protocol for coordinating major network upgrades |

| author | Callum Waters (@cmwaters) |

| discussions-to | https://forum.celestia.org/t/cip-coordinated-network-upgrades/1367 |

| status | Final |

| type | Standards Track |

| category | Core |

| created | 2023-12-07 |

Abstract

Use a pre-programmed height for the next major upgrade. Following major upgrades will use an in-protocol signalling mechanism. Validators will submit messages to signal their ability and preference to use the next version. Once a quorum of 5/6 has signalled the same version, the network will migrate to that version.

Motivation

The Celestia network needs to be able to upgrade across different state machines so that protocol-breaking features and bug fixes can be supported. Versions of the Celestia consensus node are required to support all prior state machines, so nodes are able to upgrade any time ahead of the coordinated upgrade height and very little downtime is experienced in transition. The problem addressed in this CIP is to define a protocol for coordinating that upgrade height.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

The next upgrade will be coordinated using a hard-coded height that is passed as a flag when the node commences. This is an exception necessary to introduce the upgrading protocol, which will come into effect for the following upgrade.

The network introduces two new message types, MsgSignalVersion and MsgTryUpgrade:

message MsgSignalVersion {

string validator_address = 1;

uint64 version = 2;

}

message MsgTryUpgrade { string signer = 1; }

Only validators can submit MsgSignalVersion. The Celestia state machine tracks which version each validator has signalled for. The signalled version MUST be either the current version or the next one. There is no support for skipping versions or downgrading.
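The signalling rules can be sketched with a small in-memory tracker. This is an illustration only, not the signal module itself: the type signalTracker, its methods, and the error values are hypothetical names introduced for this example.

```go
package main

import (
	"errors"
	"fmt"
)

var (
	errNotValidator   = errors.New("signer is not a validator")
	errInvalidVersion = errors.New("signalled version must be the current version or the next")
)

// signalTracker is a hypothetical stand-in for the state machine's record of
// which version each validator has signalled.
type signalTracker struct {
	currentVersion uint64
	power          map[string]uint64 // validator address -> voting power
	signals        map[string]uint64 // validator address -> signalled version
}

func newSignalTracker(currentVersion uint64, power map[string]uint64) *signalTracker {
	return &signalTracker{
		currentVersion: currentVersion,
		power:          power,
		signals:        make(map[string]uint64),
	}
}

// Signal records a validator's preference. A repeated signal overwrites the
// previous one, so signalling twice never double-counts voting power.
func (s *signalTracker) Signal(validator string, version uint64) error {
	if _, ok := s.power[validator]; !ok {
		return errNotValidator
	}
	// No skipping versions and no downgrading.
	if version != s.currentVersion && version != s.currentVersion+1 {
		return errInvalidVersion
	}
	s.signals[validator] = version
	return nil
}

// Tally sums the voting power of validators currently signalling version.
func (s *signalTracker) Tally(version uint64) uint64 {
	var total uint64
	for validator, signalled := range s.signals {
		if signalled == version {
			total += s.power[validator]
		}
	}
	return total
}

func main() {
	tracker := newSignalTracker(1, map[string]uint64{"val-a": 10, "val-b": 20})
	fmt.Println(tracker.Signal("val-a", 2), tracker.Tally(2))
	fmt.Println(tracker.Signal("val-b", 3)) // rejected: skips a version
}
```

Storing one entry per validator is what lets a validator cancel an earlier signal by signalling the current version again.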

Clients may query the tally for each version as follows:

message QueryVersionTallyRequest { uint64 version = 1; }

message QueryVersionTallyResponse {

uint64 voting_power = 1;

uint64 threshold_power = 2;

uint64 total_voting_power = 3;

}

When voting_power is greater than or equal to threshold_power, the network MAY upgrade. This is done through a “crank” transaction, MsgTryUpgrade, which can be submitted by any account. The account covers the gas required for the calculation. If the quorum is met, the chain will update the AppVersion in the ConsensusParams returned in EndBlock. Celestia will reset the tally and perform all necessary migrations at the end of processing that block in Commit. The proposer of the following height will include the new version in the block. As is currently the case, nodes will only vote for blocks that match the same network version as theirs.

If the network agrees to move to a version that is not supported by the node, the node will shut down gracefully.

The threshold_power is calculated as 5/6ths of the total voting power. Rationale is provided below.
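The quorum check can be sketched as follows. The function names are hypothetical, and rounding the threshold up when the total is not divisible by 6 is an assumption of this example; the text above does not state how fractional thresholds are handled.

```go
package main

import "fmt"

// thresholdPower returns 5/6 of the total voting power. Rounding up is an
// assumption of this sketch. Overflow of total*5 is ignored here.
func thresholdPower(total uint64) uint64 {
	return (total*5 + 5) / 6 // ceiling of total*5/6
}

// canUpgrade reports whether the power signalling the next version meets the
// threshold, which is the condition a MsgTryUpgrade would check.
func canUpgrade(votingPower, total uint64) bool {
	return votingPower >= thresholdPower(total)
}

func main() {
	fmt.Println(thresholdPower(100))  // 84
	fmt.Println(canUpgrade(83, 100)) // false
	fmt.Println(canUpgrade(84, 100)) // true
}
```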

Rationale

When attempting a major upgrade, there is increased risk that the network halts. At least 2/3 in voting power of the network is needed to migrate and agree upon the next block. However, this does not take into account the actions of byzantine validators. As an example, say there is 100 in total voting power and the upgrade threshold is 2/3. 66 have signalled v2 and 33 have yet to signal, i.e. they are still signalling v1. It takes only 1 byzantine voting power to pass the threshold, convince the network to propose v2 blocks, and then omit its vote, leaving the network unable to reach consensus until one of the 33 is able to upgrade. At the other end of the spectrum, increasing the necessary threshold means less voting power is required to veto the upgrade. The middle point here is setting a quorum of 5/6ths, which provides 1/6 byzantine fault tolerance to liveness and requires at least 1/6th of the network to veto the upgrade.
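The halting scenario can be checked with a few lines of arithmetic. The sketch below is illustrative: the function names are hypothetical, and it assumes that committing a block requires strictly more than 2/3 of the total voting power.

```go
package main

import "fmt"

// quorumReached reports whether signalled power meets num/den of total.
func quorumReached(signalled, total, num, den uint64) bool {
	return signalled*den >= total*num
}

// canCommit reports whether voting power exceeds 2/3 of total
// (assumed consensus requirement for this sketch).
func canCommit(voting, total uint64) bool {
	return voting*3 > total*2
}

// haltsAfterUpgrade models byzantine validators that signal the new version
// to reach the quorum and then withhold their votes after the upgrade.
func haltsAfterUpgrade(honestUpgraded, byzantine, total, num, den uint64) bool {
	return quorumReached(honestUpgraded+byzantine, total, num, den) &&
		!canCommit(honestUpgraded, total)
}

func main() {
	// 2/3 quorum: 66 honest + 1 byzantine passes, then 66 cannot commit.
	fmt.Println(haltsAfterUpgrade(66, 1, 100, 2, 3)) // true
	// 5/6 quorum: the same 67 signals do not trigger the upgrade at all.
	fmt.Println(quorumReached(67, 100, 5, 6)) // false
	// 5/6 quorum: 68 honest + 16 byzantine passes and 68 can still commit.
	fmt.Println(haltsAfterUpgrade(68, 16, 100, 5, 6)) // false
}
```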

Validators are permitted to signal for the current network version as a means of cancelling their prior decision to upgrade. This is important in the case that new information arises that convinces the network that the next version is not ready.

An on-chain tallying system was chosen over an off-chain one because it canonicalises the information, which is important for coordination, and adds accountability to validators. As a result of this decision, validators will need to pay fees to upgrade, which is necessary to avoid spamming the chain.

Backwards Compatibility

This feature modifies the functionality of the state machine in a breaking way, as the state machine can now dictate version changes. This will require a major upgrade to implement (thus the protocol won’t come into effect until the following major upgrade).

As the API is additive, there is no need to consider backwards compatibility for clients.

Test Cases

All implementations are advised to test the following scenarios:

- A version x node can run on a version y network where x >= y.

- A MsgTryUpgrade should not modify the app version if less than 5/6 of the voting power has signalled, and should set the new app version once that threshold has been reached.

- A version x node should shut down gracefully and not continue to validate blocks on a version y network when y > x.

- MsgSignalVersion should correctly tally each validator’s voting power. Signalling multiple times by the same validator should not increase the tally. A validator should be able to resignal a different version at any time.

Reference Implementation

The golang implementation of the signal module can be found here

Security Considerations

See the section on rationale for understanding the network halting risk.

Copyright

Copyright and related rights waived via CC0.

| cip | 11 |

|---|---|

| title | Refund unspent gas |

| description | Refund allocated but unspent gas to the transaction fee payer. |

| author | Rootul Patel (@rootulp) |

| discussions-to | https://forum.celestia.org/t/cip-refund-unspent-gas/1374 |

| status | Withdrawn |

| withdrawal-reason | The mitigation strategies for the security considerations were deemed too complex. |

| type | Standards Track |

| category | Core |

| created | 2023-12-07 |

Abstract

Refund allocated but unspent gas to the transaction fee payer.

Motivation

When a user submits a transaction to Celestia, they MUST specify a gas limit. Regardless of how much gas is consumed in the process of executing the transaction, the user is always charged a fee based on their transaction’s gas limit. This behavior is not ideal because it forces users to accurately estimate the amount of gas their transaction will consume. If the user underestimates the gas limit, their transaction will fail to execute. If the user overestimates the gas limit, they will be charged more than necessary.

Specification

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 and RFC 8174.

Consider adding a posthandler that:

- Disables the gas meter so that the following operations do not consume gas or cause an out of gas error.

- Calculates the amount of coins to refund:

  - Calculate the transaction’s gasPrice based on the equation gasPrice = fees / gasLimit.

  - Calculate the transaction’s fee based on gas consumption: feeBasedOnGasConsumption = gasPrice * gasConsumed.

  - Calculate the amount to refund: amountToRefund = fees - feeBasedOnGasConsumption.

- Determines the refund recipient:

  - If the transaction had a fee granter, refund to the fee granter.

  - If the transaction did not have a fee granter, refund to the fee payer.

- Refunds coins to the refund recipient. Note: the refund is sourced from the fee collector module account (the account that collects fees from transactions via the DeductFeeDecorator antehandler).
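The refund arithmetic and recipient selection above can be sketched as plain functions. This is not the posthandler from the reference implementation: the function names are hypothetical, amounts are modelled as integers in the smallest denomination, and rounding the consumed fee up (so the refund never exceeds the value of the unspent gas) is an assumption of this example.

```go
package main

import (
	"errors"
	"fmt"
	"math/big"
)

// amountToRefund returns fees - feeBasedOnGasConsumption, where
// feeBasedOnGasConsumption = (fees / gasLimit) * gasConsumed.
// The division is done last, in big integers, to avoid losing precision
// on the gas price and to avoid overflow.
func amountToRefund(fees, gasLimit, gasConsumed uint64) (uint64, error) {
	if gasLimit == 0 {
		return 0, errors.New("gas limit must be positive")
	}
	if gasConsumed >= gasLimit {
		return 0, nil // nothing unspent, nothing to refund
	}
	limit := new(big.Int).SetUint64(gasLimit)
	consumedFee := new(big.Int).Mul(
		new(big.Int).SetUint64(fees),
		new(big.Int).SetUint64(gasConsumed),
	)
	// Ceiling division: (fees*gasConsumed + gasLimit - 1) / gasLimit.
	consumedFee.Add(consumedFee, new(big.Int).Sub(limit, big.NewInt(1)))
	consumedFee.Quo(consumedFee, limit)
	return fees - consumedFee.Uint64(), nil
}

// refundRecipient prefers the fee granter when one was set on the transaction.
func refundRecipient(feePayer, feeGranter string) string {
	if feeGranter != "" {
		return feeGranter
	}
	return feePayer
}

func main() {
	refund, err := amountToRefund(1000, 100_000, 60_000)
	if err != nil {
		panic(err)
	}
	fmt.Println(refund, refundRecipient("payer", "")) // 400 payer
}
```

In this example the user paid 1000 for a gas limit of 100,000 but consumed only 60,000, so the effective fee is 600 and 400 is returned.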

Rationale

The entire fee specified by a transaction is deducted via an antehandler (DeductFeeDecorator) prior to execution. Since the transaction hasn’t been executed yet, the antehandler does not know how much gas the transaction will consume and therefore can’t accurately calculate a fee based on gas consumption. To avoid underestimating the transaction’s gas consumption, the antehandler overcharges the fee payer by deducting the entire fee.

This proposal suggests adding a posthandler that refunds a portion of the fee for the unspent gas back to the fee payer. At the time of posthandler execution, the gas meter reflects the true amount of gas consumed during execution. As a result, it is possible to accurately calculate the amount of fees that the transaction would be charged based on gas consumption.

The net result of the fee deduction in the antehandler and the unspent gas refund in the posthandler is that users will observe a fee that is based on gas consumption (gasPrice * gasConsumed) rather than on the gas limit (gasPrice * gasLimit).

Backwards Compatibility

This proposal is backwards-incompatible because it is state-machine breaking. Put another way, state machines with this proposal will process transactions differently than state machines without this proposal. Therefore, this proposal cannot be introduced without an app version bump.

Test Cases

TBA

Reference Implementation

https://github.com/celestiaorg/celestia-app/pull/2887

Security Considerations

DoS attack